We used Optical Character Recognition (OCR) software to convert scans of our print sci-fi books into computer-readable text files. OCR expands the potential uses of our corpus, allowing researchers not only to review scanned image files but also to search, clean, and analyze digital texts using computational tools.

Input: TIFF Files (Digital scans of science fiction book pages)

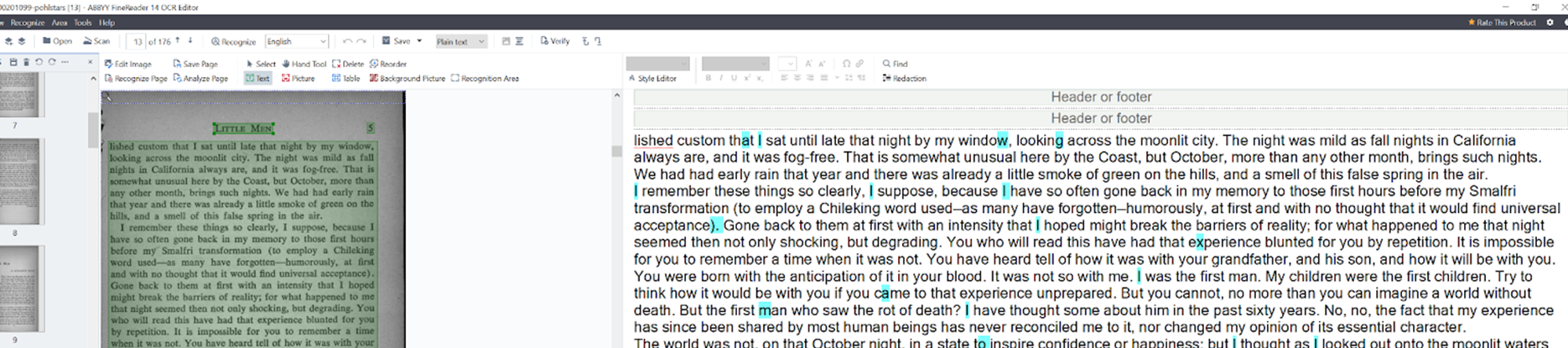

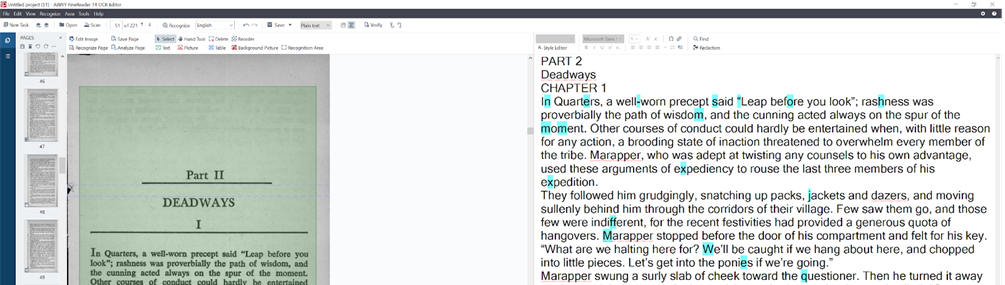

This project made use of ABBYY FineReader, commercial software that uses AI-based technology to recognize text in image files, including TIFFs, PNGs, and PDFs. We uploaded each book's page scans to ABBYY, one book at a time, and performed OCR. We also used ABBYY to assist with the following tasks:

- Removing blank pages

- Reviewing pages with “unrecognized” elements (illustrations, charts, icons and other content that OCR cannot automatically convert)

- Identifying pages that were ripped, blurred, cut off, or that otherwise required manual cleaning

- Removing headers, footers and page numbers

- Adding tags denoting START OF BOOK, END OF BOOK, and CHAPTER (each time a new section or chapter starts)
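The project performed these steps inside ABBYY FineReader. For readers working outside ABBYY, the following minimal Python sketch shows roughly equivalent text-level cleanup on pages that have already been OCR'd: it drops blank pages, strips lines that contain only a page number or match a known running header or footer, and wraps the result in boundary and chapter tags. The tag strings, the page-number pattern, and the function names `clean_page` and `assemble_book` are hypothetical; they are not the project's actual markup or code.

```python
import re

# Hypothetical tag strings: the exact markup in the project's exports is not
# documented here, so treat these as placeholders.
START_TAG = "START OF BOOK"
END_TAG = "END OF BOOK"
CHAPTER_TAG = "CHAPTER"

# Heuristic for a stripped line holding only a short number, e.g. "42" or "- 42 -".
PAGE_NUMBER = re.compile(r"^-?\s*\d{1,4}\s*-?$")


def clean_page(text, running_headers):
    """Drop page-number lines and known running headers/footers from one page.

    running_headers must already be upper-cased and stripped.
    """
    kept = []
    for line in text.splitlines():
        stripped = line.strip()
        if PAGE_NUMBER.match(stripped):
            continue  # page number
        if stripped.upper() in running_headers:
            continue  # running header or footer, e.g. the book title
        kept.append(line)
    return "\n".join(kept).strip()


def assemble_book(pages, running_headers=frozenset(), chapter_starts=frozenset()):
    """Join OCR'd pages (in reading order) into one tagged text.

    chapter_starts holds the indices of pages where a new chapter begins.
    """
    headers = {h.strip().upper() for h in running_headers}
    out = [START_TAG]
    for i, page in enumerate(pages):
        body = clean_page(page, headers)
        if not body:
            continue  # blank page, or one that held only a header/page number
        if i in chapter_starts:
            out.append(CHAPTER_TAG)
        out.append(body)
    out.append(END_TAG)
    return "\n\n".join(out)
```

For example, `assemble_book(pages, running_headers={"A Sample Novel"}, chapter_starts={0, 2})` removes the title line and page numbers from each page and skips any page left empty. The page-number heuristic also removes any body line consisting solely of a short number, so output from a sketch like this still needs the manual review described above.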

These steps yielded a collection of minimally cleaned and enriched text files that can be searched and analyzed digitally.
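Because the files carry START OF BOOK, END OF BOOK, and CHAPTER tags, a researcher can, for example, split a book into chapters before analysis. A minimal sketch, assuming each tag appears alone on its own line as the plain strings below (the exact tag markup in the exported files is an assumption here, as is the function name `split_chapters`):

```python
import re

# Placeholder tag strings; adjust to whatever markup the exported files actually use.
START_TAG = "START OF BOOK"
END_TAG = "END OF BOOK"
CHAPTER_TAG = "CHAPTER"


def split_chapters(text):
    """Return the non-empty sections found between the START and END tags."""
    start = text.find(START_TAG)
    end = text.rfind(END_TAG)
    if start == -1 or end == -1 or end < start:
        raise ValueError("missing START OF BOOK / END OF BOOK tags")
    body = text[start + len(START_TAG):end]
    # Split only where the chapter tag stands alone on its line, so a printed
    # heading such as "CHAPTER ONE" is left in the text.
    pattern = rf"^[ \t]*{re.escape(CHAPTER_TAG)}[ \t]*$"
    parts = re.split(pattern, body, flags=re.MULTILINE)
    return [part.strip() for part in parts if part.strip()]
```

Any front matter between START OF BOOK and the first CHAPTER tag comes back as its own first item. Opening files in text mode normalizes Windows line endings before the regular expression sees them.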

Output: TXT Files (Machine-readable versions of science fiction books)

Because the science fiction collection is under copyright, the exported text files can be accessed only in person at the Temple University Loretta C. Duckworth Scholars Studio, where researchers must sign an agreement to use these materials only at the Scholars Studio and only for non-consumptive research. A dataset of extracted features from these texts is freely available on this website.

Our full workflow for performing OCR on the science fiction corpus is documented in our ABBYY guidelines.

For performing OCR programmatically using Python, see our Pytesseract OCR notebook.
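The notebook itself is not reproduced here. As a rough illustration of the same idea, the sketch below OCRs a folder of TIFF page scans with the `pytesseract` wrapper and Pillow and writes one text file per book. It assumes the Tesseract binary, `pytesseract`, and Pillow are installed, that each scan's file name ends in its page number, and that English language data (`eng`) is wanted; `page_number` and `ocr_book` are hypothetical helper names, not functions from the notebook.

```python
import re
from pathlib import Path


def page_number(path):
    """Sort key: the integer at the end of a scan's file name ('book_0012.tif' -> 12)."""
    match = re.search(r"(\d+)$", Path(path).stem)
    if match is None:
        raise ValueError(f"no page number at the end of {path!r}")
    return int(match.group(1))


def ocr_book(scan_dir, out_path, lang="eng"):
    """OCR every TIFF in scan_dir, in page order, into one UTF-8 text file."""
    # Imported here so page_number() works even where Tesseract is not installed.
    import pytesseract
    from PIL import Image

    # "*.tif*" matches both .tif and .tiff (case-sensitive on most Linux systems).
    scans = sorted(Path(scan_dir).glob("*.tif*"), key=page_number)
    pages = []
    for scan in scans:
        with Image.open(scan) as img:
            pages.append(pytesseract.image_to_string(img, lang=lang))
    Path(out_path).write_text("\n\n".join(pages), encoding="utf-8")
    return len(pages)
```

Sorting by the parsed integer rather than by file name keeps `b_10.tif` after `b_2.tif` even when page numbers are not zero-padded. Raw Tesseract output will still contain the headers, page numbers, and blank pages that the ABBYY workflow removes.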

Related Resources

Ingesting Our SF Archive into HathiTrust Digital Library

Temple has ingested over 300 science fiction texts into the HathiTrust Digital Library. Because HathiTrust requires specific file formats for upload, we used Python code to package all necessary files for the SF collection. Running the Python ingestion code from the command line generated the following files for upload to HathiTrust:

- Image files (TIFF) for each page of each title (duplicated from server)

- OCR files for each page of each title (generated by Google Tesseract)

- Metadata file (YML) containing administrative and technical metadata

- Checksum file listing every file in the package and its corresponding MD5 hash value
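The checksum file is the simplest of these to generate. Below is a minimal sketch using only the standard library, assuming a flat package directory and the file name `checksum.md5` (check both against HathiTrust's current submission requirements). `md5_of` and `write_checksums` are illustrative names, not functions from the project's ingestion scripts. Each line follows the `md5sum` convention: the hex digest, two spaces, then the file name.

```python
import hashlib
from pathlib import Path


def md5_of(path, chunk_size=1 << 20):
    """Return the hex MD5 digest of a file, read in chunks to bound memory use."""
    digest = hashlib.md5()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def write_checksums(package_dir, name="checksum.md5"):
    """Write '<md5>  <filename>' lines for every file in package_dir."""
    package = Path(package_dir)
    lines = [
        f"{md5_of(f)}  {f.name}"
        for f in sorted(package.iterdir())
        if f.is_file() and f.name != name  # never hash the checksum file itself
    ]
    (package / name).write_text("\n".join(lines) + "\n", encoding="utf-8")
    return lines
```

Generate the checksum file last, after the images, OCR text, and YML metadata are in place, since any later change to a file invalidates its hash.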

The ingestion code and associated tutorial are available in our HathiTrust-Ingestion-Scripts GitHub repository.

Webscraping

For more information about the webscraping methods we used during this project, visit our GitHub repository on webscraping.